How SEO might thrive under Bard and Prometheus

Last week, Google and Bing introduced the most significant change to Search so far: Bard and Prometheus. They don't just usher in a new era of search but also give Bing its first big opportunity to grow beyond its 6% market share. Microsoft seemingly scheduled its announcement intentionally one day before Google's and did a great job presenting an exciting vision for AI Search and Edge. Google, on the other hand, delivered a confused presentation and published a press release with hallucinated results that cost the company $100B in market cap after its stock dropped by 7%.

Microsoft and Bing were not the first to integrate chatbots with Search in that way (Neeva, You and Perplexity AI got there earlier), but now we have more clarity about "how the big ones do it" and how Search changes as a result.

The next logical question is what the impact of chatbots in Search will be.

Why sites might still get SEO traffic amid AI chatbots

Both Google and Bing pointed out that traffic to the web is important, but we don't have any concrete data about site clicks from AI results. The best way to tackle the question of how much traffic sites still get is to invert it: "What would it take for users to not click on web results when given an AI answer?"

From experience, we know searchers care mostly about three factors:

Speed

Satisfaction

Completion

AI answers need to arrive faster than users can get an answer by clicking on web results. Web users are not willing to wait a long time for results. One piece of evidence for the criticality of speed is that Google still shows how long it took to deliver the search results.

Will Google make speed a competitive advantage for Bard results?

We don't know!

But I'm not the first one to think about speed and AI answers. ChatGPT is fairly slow but also powerful enough to make users wait.

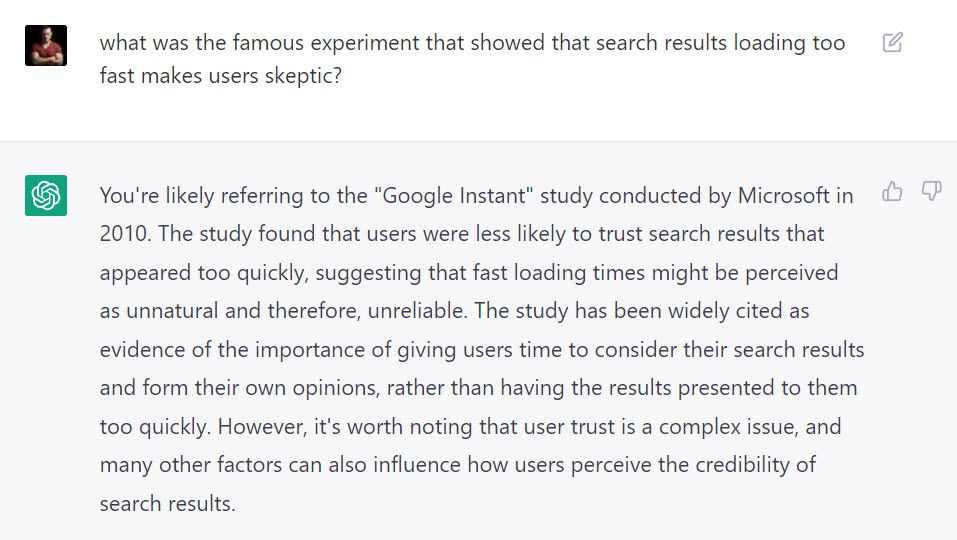

One way to get AI answers in Search faster is by basing them on page summaries. However, if speed optimization comes at the cost of accuracy or relevance, users quickly lose trust in the results. Experiments have shown that users are skeptical when results load too quickly. They need to perceive the effort going into the output.

Satisfaction is a product of accuracy and relevance. Search results need to be correct and make sense in the context.

A big problem with Search right now is that the quality of long-tail results is about as good as Google's naming skills... subpar. As soon as queries become longer than 6-7 words, the results are overwhelmingly irrelevant (see below).

That's a problem AI chatbots don't have. Their LLM capabilities allow them to give very specific answers. In fact, Bing reported a massive jump in relevance when applying Prometheus to the core ranking algorithm.

But generative AI can also make things up. The Google Instant study mentioned in the screenshot above is not real. The answer is factually inaccurate, even though it makes sense in the context.

Users might forgive inaccurate results once or twice since web results can also deliver wrong answers, but too many hallucinations quickly erode trust and usage.

A way to minimize the risk of hallucination and misinformation is matching AI answers against web results, which apparently already happens.

I wondered why AI results cite websites at all since they're trained language models, not information retrieval results, so I asked Sridhar Ramaswamy, co-founder and CEO of Neeva, to explain it to me.

Sridhar says that Neeva uses a technique called Retrieval Augmented Generation (RAG), a hybrid of classic information retrieval and machine learning. With RAG, you can ground LLMs (Large Language Models) in retrieved documents and "remove" inaccurate results by setting constraints. In plain terms, you can use the ranking score of web pages to show the AI what you want. That seems to be the same technique Bing uses, or a similar one, to make sure Prometheus results are as accurate and relevant as possible.
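To make the idea more tangible, here is a minimal sketch of the retrieve-then-generate pattern in Python. It is not Neeva's or Bing's implementation. The `Page` class, the `rank_score` field, the `min_score` threshold, the example URLs and the prompt wording are all assumptions made up for illustration, and the sketch stops at building the prompt instead of calling a real language model.

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    text: str
    rank_score: float  # hypothetical ranking score from the search index, 0..1


def retrieve(query: str, pages: list[Page], min_score: float = 0.5, k: int = 2) -> list[Page]:
    """Retrieval step: keep pages that share a term with the query and
    clear the ranking-score threshold, best-ranked first."""
    terms = set(query.lower().split())
    candidates = [
        p for p in pages
        if p.rank_score >= min_score and terms & set(p.text.lower().split())
    ]
    return sorted(candidates, key=lambda p: p.rank_score, reverse=True)[:k]


def build_prompt(query: str, sources: list[Page]) -> str:
    """Input for the generation step: restrict the model to the retrieved
    sources and ask it to cite them by number."""
    numbered = "\n".join(f"[{i}] {p.url}: {p.text}" for i, p in enumerate(sources, 1))
    return (
        "Answer using only the sources below and cite them by number.\n"
        f"{numbered}\n"
        f"Question: {query}"
    )


# Made-up pages: one relevant and trusted, one relevant but low-ranked, one off-topic.
pages = [
    Page("https://example.com/a", "bing uses prometheus for chat answers", 0.9),
    Page("https://example.com/b", "prometheus rumors from an unreliable forum", 0.2),
    Page("https://example.com/c", "recipes for banana bread", 0.8),
]
query = "what is prometheus"
sources = retrieve(query, pages)
prompt = build_prompt(query, sources)
print(prompt)
```

In this toy setup, only the first page reaches the prompt: the forum page falls below the score threshold and the recipe page shares no terms with the query. That filtering is the "constraint" part, and the numbered sources are what would let an answer cite websites.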

Lastly, AI answers have to complete the user journey for searchers to not click on web results. That sounds simple when it comes to basic questions like "when does the Super Bowl start", which still deliver a terrible user experience in Search today for some queries. It's a lot more complex, however, when it comes to commercial searches (buy, compare, evaluate). AI can help you compare products, but it doesn't ask you for your credit card details and can't tell you about first-hand experience with a product.

Commercial queries are the heart of Google's business because that's where advertisers are willing to bid the most money. And that's where I'm bullish about SEO.

Could AI chatbots make SEO traffic better?

It's likely that AI chatbots in Search cause a decrease in traffic from informational queries, but they don't allow users to buy products or solutions. As a result, SEO traffic as a whole might shrink, but the traffic that still arrives at websites might convert much better because visitors are further along in the user journey.

As a result of better-converting and less noisy traffic, SEOs might be able to better measure the impact of SEO. Forecasts become more accurate. SEO as a whole gets more leadership buy-in and funding. Chatbots could have a net positive impact on SEO.
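The trade-off between less traffic and better conversion is easier to see with numbers. The sketch below uses entirely hypothetical figures (the visit counts, the conversion rates and the 60% share of informational visits absorbed by chatbots are assumptions, not data) to show how total traffic can shrink while the blended conversion rate goes up.

```python
def blended(info_visits: float, commercial_visits: float,
            info_cr: float, commercial_cr: float) -> tuple[float, float, float]:
    """Return (total visits, total conversions, blended conversion rate)."""
    visits = info_visits + commercial_visits
    conversions = info_visits * info_cr + commercial_visits * commercial_cr
    return visits, conversions, conversions / visits


# Hypothetical site: 10,000 organic visits a month, 70% informational.
INFO_CR = 0.005        # assumed conversion rate of informational visits
COMMERCIAL_CR = 0.03   # assumed conversion rate of commercial visits
ABSORBED = 0.6         # assumed share of informational visits answered by the chatbot

before = blended(7_000, 3_000, INFO_CR, COMMERCIAL_CR)
after = blended(7_000 * (1 - ABSORBED), 3_000, INFO_CR, COMMERCIAL_CR)

for label, (visits, conversions, rate) in (("before", before), ("after", after)):
    print(f"{label}: {visits:,.0f} visits, {conversions:.0f} conversions, {rate:.2%} rate")
```

Under these assumptions, visits fall from 10,000 to 5,800 (a 42% drop) while conversions only fall from 125 to 104, so the blended conversion rate rises from 1.25% to roughly 1.79%. The remaining traffic is smaller but carries a clearer signal.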

How chatbots decide which site to send high-intent traffic to is still unclear. It's possible that SEOs will focus more on getting their brand/site into AI answers in the future, similar to how we optimize for featured snippets today. The underlying RAG model could make it possible, but there are too many unknowns so far. Let's please not make "RAG optimization" a thing.

More AI = more information gaps

A second-order effect of AI chatbots answering informational queries is the loss of impressions and search volume data. Technically, classic web results only get one impression when users have a long interaction with an AI chatbot in search. SEOs would lose impressions from query refinements and follow-up searches. Would search volume increase for every question from users during an interaction or just count the first question?

And what about impressions of brands mentioned in AI answers? We get impressions when our content appears in Featured Snippets today, but we already can't tell whether an impression comes from a normal web result or a Featured Snippet without looking at the search results or third-party rank trackers. That information gap might get a lot bigger.

So much is still unclear

In 2018, I wrote about Google's shift from search to answer engine, making the argument that Google would use AI to surface better results. Little did I know it was going to be in the format of a chatbot and shift from answers to conversations. And yet, we can't say with high certainty what the implications of chatbots in search are before they are rolled out.

What we do know is:

Bing chat answers live next to search results, not on top

Neeva still displays search results

Bing citations link to websites

Google intends to keep sending traffic to the open web

Copyright questions are unanswered

Voice search was promised to change search but hasn't