GSC data is 75% incomplete

My analysis of 450M impressions shows Google filters out 3/4 of your impression data. Here is how to measure the gap.

This Memo was sent to 24,218 subscribers. Welcome to +124 new readers!

My findings this week show that Google filters out about 75% of GSC impressions, which makes decisions based on GSC data alone dangerously unreliable.

Premium subscribers get the scripts I used to calculate bot share and sampling rate, so they can apply them to their own data.

1. GSC used to be ground truth

Search Console data used to be the most accurate representation of what happens in the search results. But privacy sampling, bot-inflated impressions, and AI Overview (AIO) distortion suck the reliability out of the data.

Without understanding how your data is filtered and skewed, you risk drawing the wrong conclusions from GSC data.

SEO data has been on a long path toward becoming less reliable, from Google removing the keyword referrer to excluding critical SERP features from performance reports. But three key events over the last 12 months topped it off:

January 2025: Google deploys “SearchGuard,” requiring JavaScript and (sophisticated) CAPTCHAs for anyone viewing search results (it turns out Google uses many advanced signals to distinguish humans from scrapers).

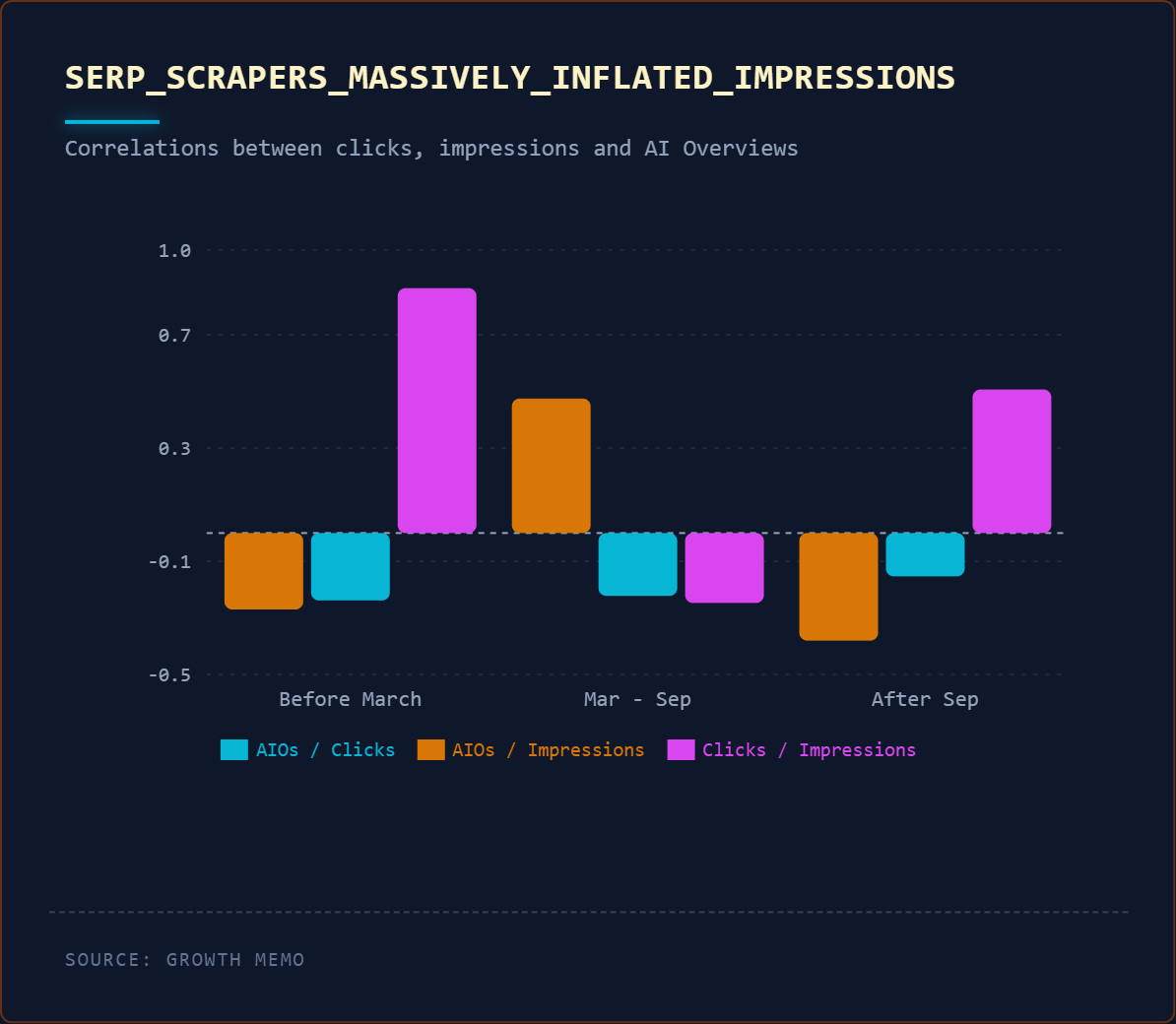

March 2025: Google sharply increases the number of AI Overviews in the SERPs. We see a spike in impressions and a drop in clicks.

September 2025: Google removes the num=100 parameter, which SERP scrapers used to load 100 results per page. The impression spike normalizes; clicks stay down.

On one hand, Google took measures to clean up GSC data. On the other hand, the data still leaves us with more open questions than answers.

2. Privacy sampling hides 75% of queries

Google filters out a significant share of impressions (and clicks) for “privacy” reasons. One year ago, Patrick Stox analyzed a large dataset and concluded that almost 50% are filtered out.

I repeated the analysis across 10 US-based B2B sites (~4 million clicks and ~450 million impressions).

Methodology:

Google Search Console (GSC) reveals its filtering behavior through two kinds of Search Analytics API requests. The aggregate request (no dimensions) returns total clicks and impressions, including all data. The query-level request (with the ‘query’ dimension) returns only queries that meet Google’s privacy threshold.

By comparing these 2 numbers, you can calculate the filter rate.
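To make the two requests concrete, here is a minimal Python sketch, not the script used for this analysis. It assumes you already have an authorized `service` object from google-api-python-client for the Search Console API; the helper names `fetch_rows` and `fetch_totals` are hypothetical. It pages through results with `startRow` and `rowLimit` and sums clicks and impressions for each request.

```python
from typing import Any, Optional

ROW_LIMIT = 25_000  # maximum rows per Search Analytics API page


def fetch_rows(service: Any, site_url: str, start: str, end: str,
               dimensions: Optional[list] = None) -> list:
    """Return all rows for one Search Analytics request, paging with startRow."""
    rows, start_row = [], 0
    while True:
        body = {
            "startDate": start,  # "YYYY-MM-DD"
            "endDate": end,
            "rowLimit": ROW_LIMIT,
            "startRow": start_row,
        }
        if dimensions:
            body["dimensions"] = dimensions
        response = service.searchanalytics().query(siteUrl=site_url, body=body).execute()
        page = response.get("rows", [])
        rows.extend(page)
        if len(page) < ROW_LIMIT:  # last page reached
            return rows
        start_row += ROW_LIMIT


def fetch_totals(service: Any, site_url: str, start: str, end: str) -> dict:
    """Aggregate totals (no dimensions) vs. totals summed over query-level rows."""
    aggregate = fetch_rows(service, site_url, start, end)
    by_query = fetch_rows(service, site_url, start, end, dimensions=["query"])

    def total(rows: list, metric: str) -> float:
        return sum(r[metric] for r in rows)

    return {
        "aggregate_clicks": total(aggregate, "clicks"),
        "aggregate_impressions": total(aggregate, "impressions"),
        "query_clicks": total(by_query, "clicks"),
        "query_impressions": total(by_query, "impressions"),
    }
```

One caveat: on very large properties the API may not return every query row, so part of the measured gap could come from row limits rather than privacy filtering. This sketch does not separate the two.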

For example, if aggregate data shows 4,205 clicks but query-level data only shows 1,937 visible clicks, Google filtered 2,268 clicks (53.94%).
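The filter rate is the hidden share of the aggregate total. A minimal sketch that reproduces the example above (the function name `filter_rate` is illustrative):

```python
def filter_rate(aggregate_total: float, query_level_total: float) -> float:
    """Percent of the aggregate total that is missing from query-level rows."""
    if aggregate_total <= 0:
        raise ValueError("aggregate total must be positive")
    hidden = aggregate_total - query_level_total  # filtered clicks or impressions
    return 100 * hidden / aggregate_total


# Worked example: 4,205 aggregate clicks, 1,937 visible at query level
print(round(filter_rate(4205, 1937), 2))  # → 53.94
```

The same function works for impressions: pass the aggregate and query-level impression totals instead.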

For each of the 10 B2B SaaS sites, I compared 30-day, 90-day, and 12-month periods against the same analysis from 12 months earlier.

My conclusion:

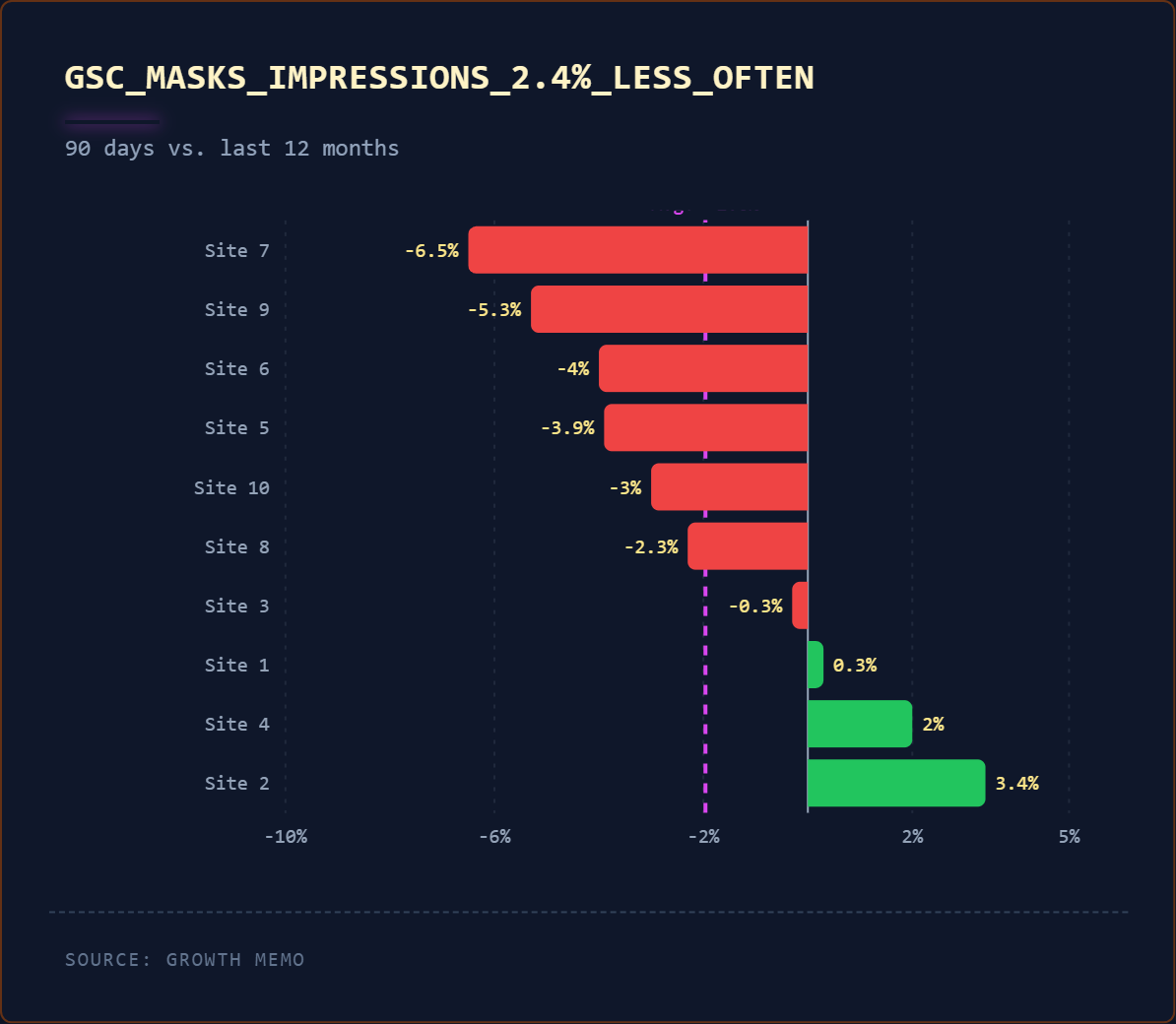

1/ Google filters out ~75% of impressions

The filter rate on impressions is incredibly high, with ¾ filtered for privacy.

12 months ago, the rate was only 2 percentage points higher.

The range I observed went from 59.3% all the way up to 93.6%.

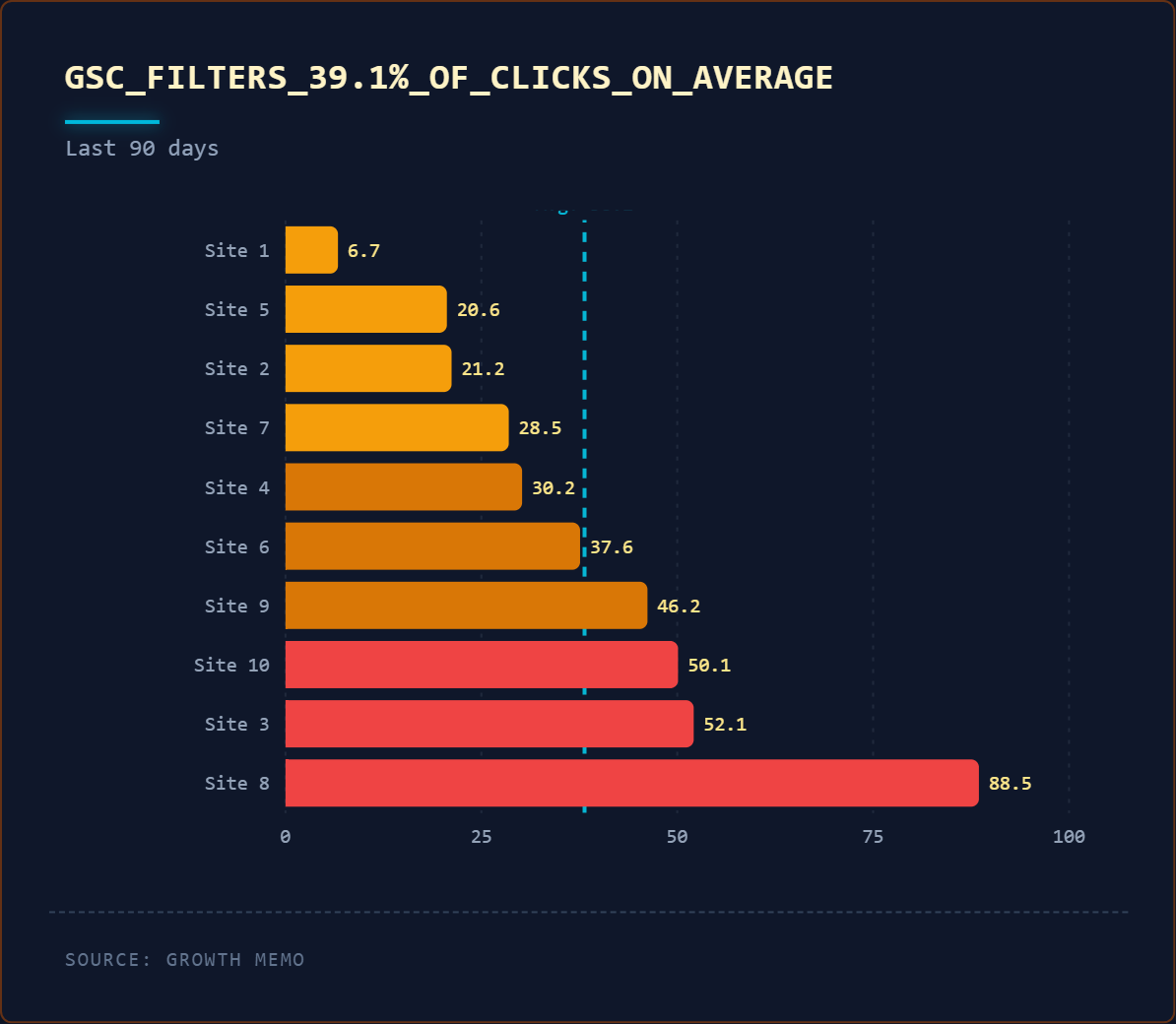

2/ Google filters out ~38% of clicks, down ~5% from 12 months ago

Click filtering is not something we talk about a lot, but it seems Google doesn’t report more than one-third of all clicks that happened.

12 months ago, Google filtered out over 40% of clicks.

The range of filtering spans from 6.7% to 88.5%!

The good news is that the filter rate has gone slightly down over the last 12 months, probably as a result of fewer “bot impressions.”

The bad news: The core problem persists. Even with these improvements, 38% click-filtering and 75% impression-filtering remain catastrophically high. A 5% improvement doesn’t make single-source GSC decisions reliable when 3/4 of your impression data is missing.

Premium subscribers get the script I used to do this analysis so they understand how much of their own data is filtered out.

3. 2025 impressions are highly inflated

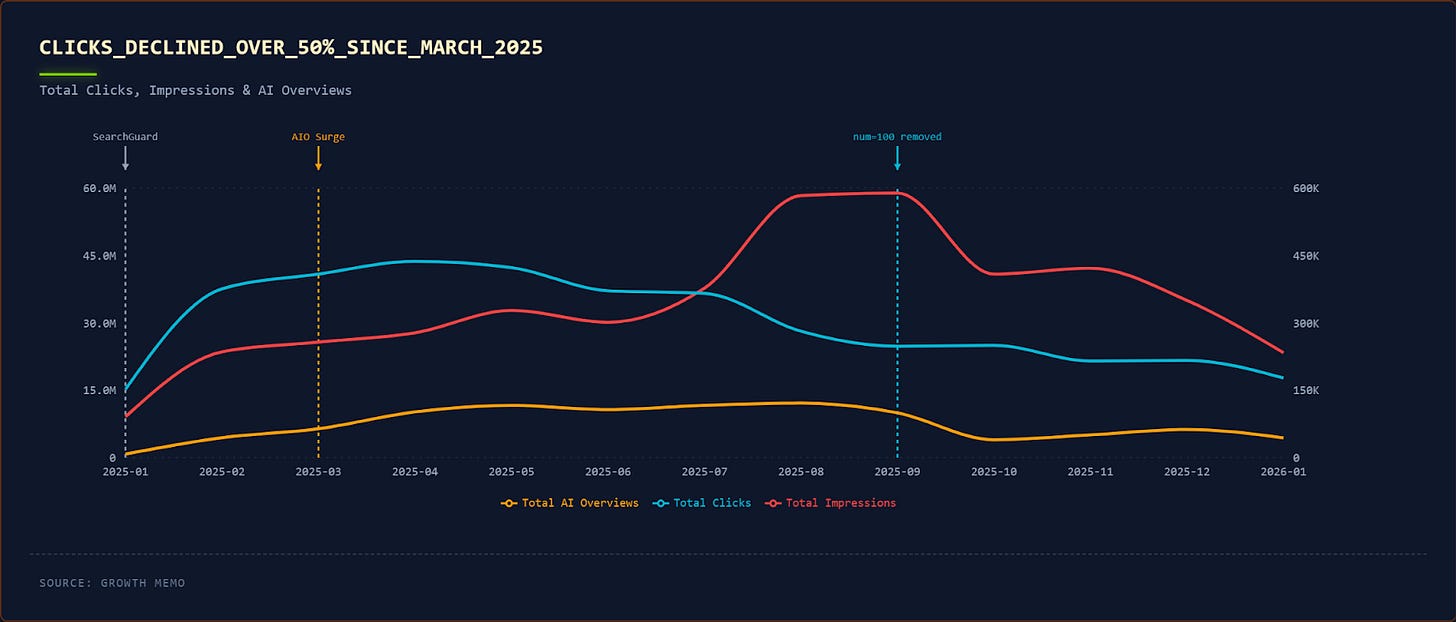

The last 12 months show a rollercoaster of GSC data:

In March 2025, Google intensified the rollout of AIOs and showed 58% more of them for the sites I analyzed.

In July, impressions grew by 25.3%, and by another 54.6% in August. SERP scrapers apparently found a way around SearchGuard (the protection Google uses to block SERP scrapers) and generated “bot impressions” as they captured AIOs.

In September, Google removed the num=100 parameter, which caused impressions to drop by 30.6%.

Fast forward to today:

Clicks decreased by 56.6% since March 2025

Impressions normalized (down 9.2%)

AIO appearances decreased by 31.3%

I can’t pin down a causal number for how many clicks AIOs cost, but the correlation is strong: 0.608. We know AIOs reduce clicks (it makes logical sense), but not exactly by how much. To figure that out, I’d have to measure CTR for queries before and after an AIO shows up.
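The before/after CTR measurement could look like the sketch below. It assumes you have your own per-query clicks and impressions for a window before and after an AIO appeared, plus a flag from your own SERP tracking (`got_aio`); all names are hypothetical. Comparing against queries that never got an AIO (a simple difference-in-differences) goes beyond the method described here; it is an added assumption meant to separate AIO impact from sitewide trends.

```python
from dataclasses import dataclass


@dataclass
class QueryWindow:
    query: str
    got_aio: bool  # hypothetical flag from your own SERP tracking
    clicks_before: int
    impressions_before: int
    clicks_after: int
    impressions_after: int


def pooled_ctr(clicks: int, impressions: int) -> float:
    return clicks / impressions if impressions else 0.0


def ctr_change(rows: list, got_aio: bool) -> float:
    """Relative change in pooled CTR (after vs. before) for one group of queries."""
    group = [r for r in rows if r.got_aio == got_aio]
    before = pooled_ctr(sum(r.clicks_before for r in group),
                        sum(r.impressions_before for r in group))
    after = pooled_ctr(sum(r.clicks_after for r in group),
                       sum(r.impressions_after for r in group))
    if before == 0:
        raise ValueError("no baseline CTR for this group")
    return (after - before) / before


def aio_ctr_effect(rows: list) -> float:
    """CTR change for AIO queries minus CTR change for non-AIO queries."""
    return ctr_change(rows, True) - ctr_change(rows, False)
```

A negative result means CTR fell more for queries that gained an AIO than for comparable queries that did not.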

But how do you know click decline is due to an AIO and not just poor content quality or content decay?

Look for temporal correlation:

Track when your clicks dropped against Google’s AIO rollout timeline (March 2025 spike). Poor content quality shows gradual decline; AIO impact is sharp and query-specific.

Cross-reference with position data. If rankings hold steady while clicks drop, that signals AIO cannibalization. Check whether the affected queries are informational (AIO-prone) or transactional (AIO-resistant). The 0.608 correlation between AIO presence and click reduction in my dataset supports this diagnostic approach.
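The timing and position checks can be combined into a per-query test: did average daily clicks fall sharply after a given date while average position barely moved? This is a minimal sketch assuming you have daily GSC rows for one query; the 28-day window, 30% click-drop threshold, and 1-position tolerance are hypothetical defaults, not values from this analysis.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from statistics import mean


@dataclass
class DailyQueryRow:
    day: date
    clicks: int
    position: float  # GSC average position for the query that day


def window_means(rows: list, start: date, end: date):
    """Average daily clicks and (unweighted) average position for start <= day < end."""
    selected = [r for r in rows if start <= r.day < end]
    if not selected:
        return None
    return mean(r.clicks for r in selected), mean(r.position for r in selected)


def flags_aio_cannibalization(rows: list, change_date: date, window_days: int = 28,
                              min_click_drop: float = 0.3,
                              max_position_shift: float = 1.0) -> bool:
    """True if clicks fell sharply after change_date while position held steady."""
    span = timedelta(days=window_days)
    before = window_means(rows, change_date - span, change_date)
    after = window_means(rows, change_date, change_date + span)
    if before is None or after is None or before[0] == 0:
        return False  # not enough data to judge
    click_drop = (before[0] - after[0]) / before[0]
    position_shift = abs(after[1] - before[1])
    return click_drop >= min_click_drop and position_shift <= max_position_shift
```

A gradual decline spread over many months would not trip this check, which matches the distinction between content decay and a sharp, query-specific AIO hit. Sorting flagged queries into informational vs. transactional remains a separate step.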

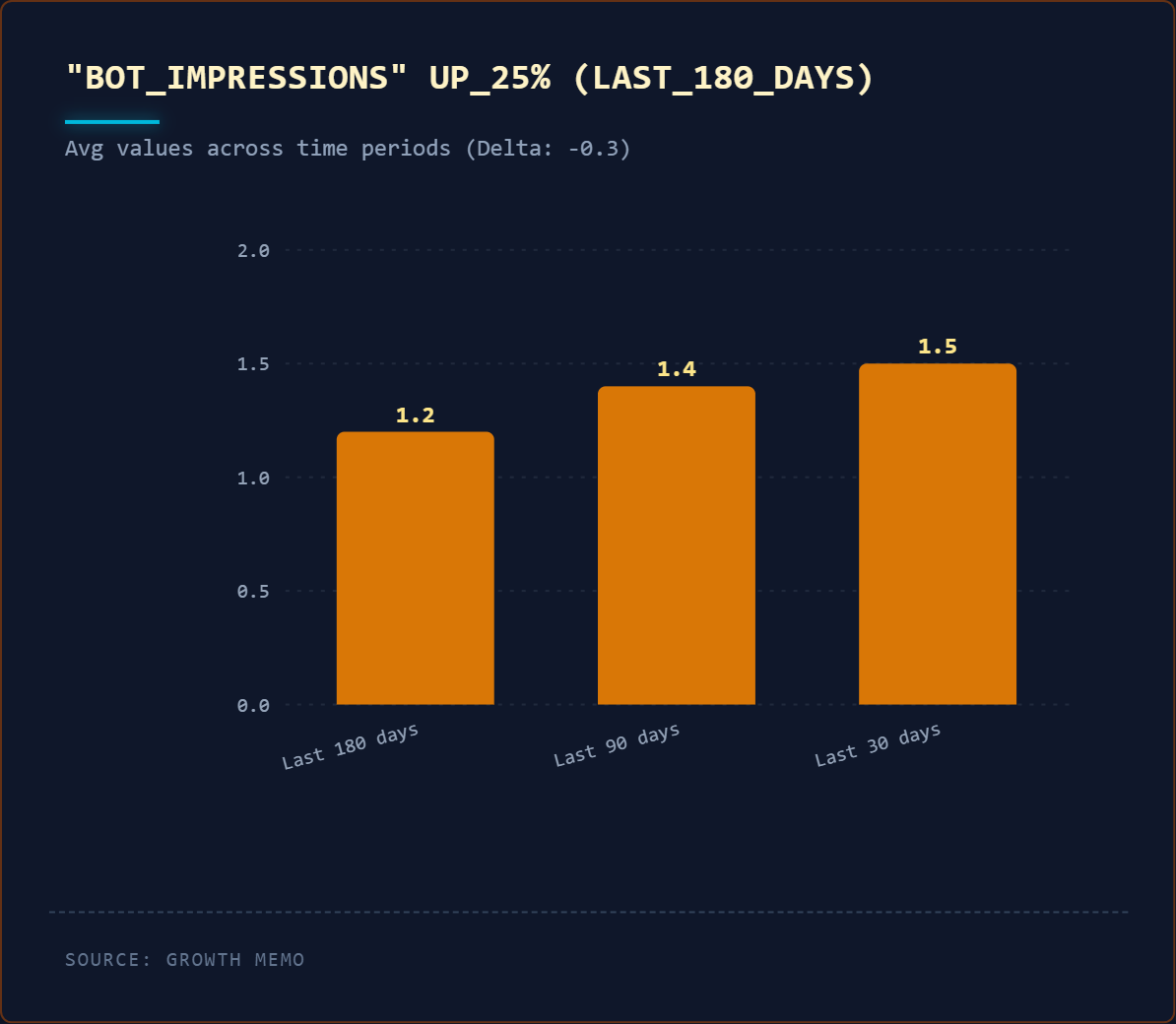

4. Bot impressions are rising

I have reason to believe that SERP scrapers are coming back. We can estimate the share of impressions likely caused by bots by filtering GSC data for queries that contain 10+ words and 2+ impressions. The chance that a human uses such a long query (prompt) twice is close to 0.

The logic of bot impressions:

Hypothesis: Humans rarely search for the exact same 5+ word query twice in a short window.

Filter: Identify queries with 10+ words that have >1 impression but 0 clicks.

Caveat: This method may capture some legitimate zero-click queries, but provides a directional estimate of bot activity.
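The filter above translates into a few lines of Python. A minimal sketch, assuming query-level rows shaped like Search Analytics API output (`keys`, `clicks`, `impressions`); the function names are hypothetical. The denominator for the bot share is not specified here, so the function defaults to query-level impressions and lets you pass an aggregate total instead.

```python
from typing import Optional


def is_likely_bot(row: dict, min_words: int = 10, min_impressions: int = 2) -> bool:
    """Long query, repeated impressions, zero clicks."""
    query = row["keys"][0]
    return (len(query.split()) >= min_words
            and row["impressions"] >= min_impressions
            and row["clicks"] == 0)


def bot_share(query_rows: list, total_impressions: Optional[float] = None) -> float:
    """Percent of impressions that come from likely-bot queries."""
    bot = sum(r["impressions"] for r in query_rows if is_likely_bot(r))
    if total_impressions is None:
        total_impressions = sum(r["impressions"] for r in query_rows)
    return 100 * bot / total_impressions if total_impressions else 0.0
```

Because the filter only looks at word count, impressions, and clicks, it inherits the caveat above: some legitimate zero-click long queries will be counted.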

I compared those queries over the last 30, 90, and 180 days:

Queries with 10+ words and more than one impression grew by 25% over the last 180 days

The range of bot impressions spans from 0.2% to 6.5% (last 30 days)

Here’s what you can anticipate as a “normal” percentage of bot impressions for a typical SaaS site:

In the 10-site B2B dataset, bot impressions (queries with 10+ words, 2+ impressions, and 0 clicks) ranged from 0.2% to 6.5% over 30 days.

For SaaS specifically, expect 1-3% baseline for bot impressions. Sites with extensive documentation, technical guides, or programmatic SEO pages trend higher (4-6%).

The 25% growth over 180 days suggests scrapers are adapting post-SearchGuard. Monitor where your site falls within this range more than the absolute number.

Bot impressions don’t affect your actual rankings, only your reporting, because they inflate impression counts. The practical impact? Misallocated resources, if you optimize for inflated-impression queries that humans never search for.

5. The measurement layer is broken

Single-source decisions based on GSC data alone become dangerous:

3/4 of impressions are filtered

Bot impressions account for up to 6.5% of impressions

Clicks dropped by over 50% as AIOs expanded

User behavior is structurally changing

Your opportunity is in the methodology: Teams that build robust measurement frameworks (sampling rate scripts, bot-share calculations, multi-source triangulation) have a competitive advantage.

Premium: Scripts for data sampling and long query tracking

The scripts below let you quantify how much of your GSC data is noise and pinpoint where GSC impressions stop being reliable. If you’re still forecasting, prioritizing, or making strategic calls off raw GSC impressions, this is how you correct the signal before it derails your decision-making.