Growth Intelligence Brief #13

Google is making AI changes to the SERPs, Chrome and shopping at a rapid pace. We're getting more telemetry but are still missing key numbers (AIOs, *cough* *cough*). Also: which sites are already winning 2026?

Welcome to another Growth Intelligence Brief, where organic growth leaders discover what matters - getting insights into the bigger picture and guidance on how to stay ahead of the competition.

As a free subscriber, you’re getting the first big story. Premium subscribers get the whole brief.

Today’s Growth Intelligence Brief went out to 534 (+28) marketing leaders.

This week, we’re looking at the infrastructure of the Agentic Web coming together across major Search and Commerce updates from Google and OpenAI, and how Microsoft Clarity finally lets us track AI agent visitors.

I’ll also connect the dots on what this all means for you.

Microsoft Clarity is offering us some clarity

Here’s what happened:

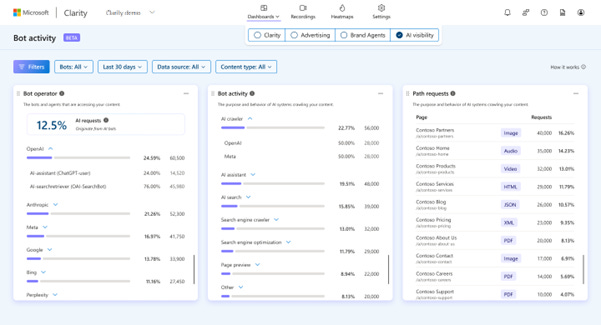

Microsoft Clarity introduced reporting that shows AI bot traffic and activity across websites, offering more transparency into how automated agents crawl and interact with content. (And Growth Memo readers everywhere rejoiced!)

The new “Bot Activity” report is a dashboard that tracks server-side signals to show exactly how AI agents access your site.

Unlike standard analytics that track human visits, this requires a CDN or server integration to capture the “upstream” activity, meaning the scraping and crawling that happens before a user ever sees an answer.

The report breaks down traffic by “Bot Operator” (e.g., OpenAI, Anthropic, Google) and, crucially, “Bot Activity” type, distinguishing between an “AI Crawler” (scraping for training data) and an “AI Assistant” (fetching a live answer for a user).

Why this news matters:

AI bots don’t behave like search bots; they don’t just index content, they consume it. What was once hidden in complex server logs is now visible, letting us easily track whether an AI is reading our site 10,000 times a day or ignoring it completely.

This dashboard democratizes the “AI Request Share” metric, allowing us to quantify how much of our infrastructure is serving non-human agents versus actual customers without needing a data science team. It effectively separates “Training” (extractive) from “Inference” (potential visibility).
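To make the “AI Request Share” idea concrete, here is a minimal sketch of the arithmetic. It assumes the metric is simply bot requests divided by all requests, and that you have one operator label per request (with `None` for human traffic). The sample data and the function name are hypothetical, not Clarity's export format.

```python
from collections import Counter

# Hypothetical sample: one entry per request; None marks human (non-bot) traffic.
sample_requests = [
    "OpenAI", None, "Anthropic", None, None,
    "OpenAI", None, "Google", None, None,
]

def ai_request_share(operators):
    """Return (overall AI share, per-operator share of all requests)."""
    total = len(operators)
    if total == 0:
        return 0.0, {}
    bot_counts = Counter(op for op in operators if op is not None)
    overall = sum(bot_counts.values()) / total
    per_operator = {op: count / total for op, count in bot_counts.items()}
    return overall, per_operator

overall, per_operator = ai_request_share(sample_requests)
print(f"AI request share: {overall:.0%}")
for op, share in sorted(per_operator.items()):
    print(f"  {op}: {share:.0%}")
```

In this made-up sample, 4 of 10 requests come from bots, so the share is 40%.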

My take on this:

It’s okay not to get traffic. That’s the new reality we have to accept. The real breakthrough here is that it’s now much easier to know whether an AI actually used your content as the basis for its answers.

Previously, “Zero Click” was a black box; we had to guess if our content was fueling the AI’s response or if we were just being ignored. Now, we have proof. If you see high AI consumption of your content, you know you are winning mindshare and influencing the answer, even if you aren’t getting the click. This metric finally validates the strategy of “feeding the bot” to maintain brand relevance in a world where the user might never leave the chat interface.

Here’s what to do:

Enable Server-Side Integration: You cannot get this data with just the JavaScript snippet. Connect your CDN (Cloudflare, etc.) to Clarity to see the server logs.

Audit “Path Requests”: Identify what the bots are reading. Are they scraping your high-value proprietary data (pricing, JSON endpoints) or your brand-building content (blog, about page)?

Calculate your “AI Conversion Rate”: Divide your AI Referral Traffic (from GA4) by your AI Request Volume (from Clarity). If requests massively outnumber referrals, you need to rethink your content strategy for agents.
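The last two steps can be sketched in a few lines of Python. This is a rough illustration, not an official Clarity or GA4 integration. The path prefixes, the per-operator totals, and the function names are all hypothetical, and it assumes you have copied the numbers out of each tool by hand.

```python
from collections import Counter

# Hypothetical path prefixes; swap in the sections that matter on your site.
PROPRIETARY_PREFIXES = ("/pricing", "/api/")
BRAND_PREFIXES = ("/blog", "/about")

def bucket_path(path):
    """Label a requested path as proprietary, brand, or other."""
    if path.startswith(PROPRIETARY_PREFIXES):
        return "proprietary"
    if path.startswith(BRAND_PREFIXES):
        return "brand"
    return "other"

def audit_paths(paths):
    """Count bot requests per content bucket."""
    return Counter(bucket_path(p) for p in paths)

def ai_conversion_rate(ai_requests, ai_referral_sessions):
    """AI referral sessions per AI bot request; None when there are no requests."""
    if ai_requests <= 0:
        return None
    return ai_referral_sessions / ai_requests

# Hypothetical bot-requested paths and monthly totals, entered by hand.
bot_paths = ["/blog/post-1", "/pricing", "/api/v1/plans.json", "/about", "/careers"]
monthly = {
    "OpenAI": {"requests": 12000, "referrals": 240},
    "Anthropic": {"requests": 3000, "referrals": 15},
}

print(dict(audit_paths(bot_paths)))
for operator, m in monthly.items():
    rate = ai_conversion_rate(m["requests"], m["referrals"])
    print(f"{operator}: {rate:.2%} of bot requests came back as referral sessions")
```

Tracking the rate per operator rather than in aggregate makes it easier to see which agents read heavily without sending visitors back.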