Over the last week, I observed many arguments against digging deep into the 2,595 pages. But the only question we should ask ourselves is, “How can I test and learn as much as possible from these documents?” SEO is an applied science where theory is not the end goal but the basis for experiments.

14,000 test ideas

You couldn’t ask for a better breeding ground for test ideas. But we cannot test every factor the same way. Factors differ in type (number/integer: range, Boolean: yes/no, string: word/list) and in reaction time (the speed at which they lead to a change in organic rank). As a result, we can A/B test fast and active factors, while we have to before/after test slow and passive ones.

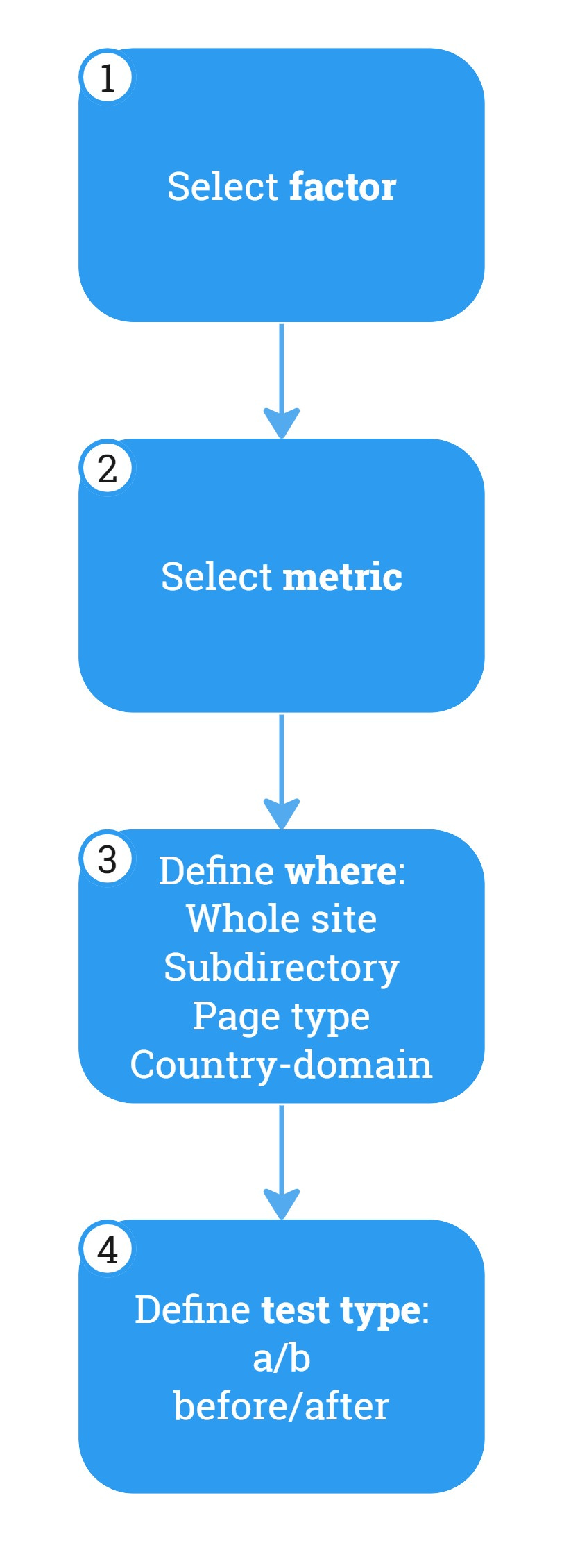

Test ranking factors systematically by:

Select a ranking factor

Select the impacted (success) metric

Define where you test

Define the type of test
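The four steps above can be written down as a small test plan. This is a minimal sketch, not a prescribed workflow: the `RankingFactorTest` class, its field names, and the `fast_reacting` flag are hypothetical, and whether a given factor reacts quickly is your own estimate, not something the leak states.

```python
from dataclasses import dataclass


@dataclass
class RankingFactorTest:
    factor: str          # step 1: the ranking factor under test
    metric: str          # step 2: the impacted (success) metric
    scope: str           # step 3: where the test runs, e.g. a subdirectory
    fast_reacting: bool  # assumption: your own estimate of the reaction time

    @property
    def test_type(self) -> str:
        # Step 4: fast, active factors suit A/B tests;
        # slow, passive factors need before/after tests.
        return "a/b" if self.fast_reacting else "before/after"


# Hypothetical example plan; the values are illustrative only.
plan = RankingFactorTest(
    factor="intrusive interstitials",
    metric="rank for main keyword",
    scope="/blog/",
    fast_reacting=True,
)
print(plan.factor, "->", plan.test_type)
```

Writing the plan down before changing anything makes it harder to switch the success metric after the fact.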

Ranking factors

Most ranking factors in the leak are integers, meaning they work on a spectrum, but some Boolean factors are easy to test:

Image compression: yes/no?

Intrusive interstitials: yes/no?

Core Web Vitals: yes/no?

Factors you can directly control:

UX (navigation, font size, line spacing, image quality)

Content (fresh, optimized titles, not duplicative, rich in relevant entities, focus on one user intent, high effort, crediting original sources, using canonical forms of a word instead of slang, high UGC quality, expert author)

User engagement (high rate of task completion)

Demoting (negative) ranking factors:

Links from low-quality pages and domains

Aggressive anchor text (unless you have an extremely strong link profile)

Poor navigation

Poor user signals

Factors you can only influence passively:

Title match and relevance between source and linked document

Link clicks

Links from new and trusted pages

Domain authority

Brand mentions

Homepage PageRank

Start with an assessment of your performance in the area you want to test in. A straightforward use case would be Core Web Vitals.

Metrics

Pick the right metric for the right factor based on the description in the leaked document or your understanding of how a factor might impact a metric:

Crawl rate

Indexing (yes/no)

Rank (for main keyword)

CTR

Engagement

Number of keywords a page ranks for

Organic clicks

Impressions

Rich snippets

Where to test

Find the right place to test:

If you’re skeptical, use a country-specific domain or a site where you can test with low risk. If you have a site in many languages, you can roll out changes based on the leaks in one country and compare relative performance against your core country.

You can limit tests to one page type or subdirectory to isolate the impact as well as you can.

Limit tests to pages addressing a specific type of keyword (e.g. “best x”) or user intent (e.g. “read reviews”).

Some ranking factors are sitewide signals, like site authority, and others are page-specific, like click-through rates.
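When a test is limited to one subdirectory, the pages inside it still need to be split into a control and a variant group. One way to do that, sketched here under assumptions, is a deterministic hash of the URL so the assignment stays stable between runs. The function names, the `salt` value, and the example URLs are hypothetical.

```python
import hashlib


def assign_group(url: str, salt: str = "test-1") -> str:
    """Assign a URL to 'variant' or 'control' based on a stable hash."""
    digest = hashlib.sha256((salt + url).encode("utf-8")).hexdigest()
    return "variant" if int(digest, 16) % 2 == 0 else "control"


def split_urls(urls, prefix):
    """Keep only URLs under the given prefix and split them into two groups."""
    groups = {"control": [], "variant": []}
    for url in urls:
        if url.startswith(prefix):
            groups[assign_group(url)].append(url)
    return groups


# Hypothetical URLs; only the /blog/ pages are part of the test.
urls = [
    "https://example.com/blog/a",
    "https://example.com/blog/b",
    "https://example.com/shop/c",
]
groups = split_urls(urls, "https://example.com/blog/")
print(groups)
```

Changing the salt gives a fresh split for the next test, so the same pages do not always end up in the variant group.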

Considerations

Ranking factors can work with or against each other since they’re part of an equation. Humans are notoriously bad at intuitively understanding functions with many variables, which means we most likely underestimate how much goes into achieving a high rank score but also how a few variables can significantly impact the outcome. High complexity of the relationship between ranking factors shouldn’t keep us from experimenting.

Aggregators can test more easily than Integrators because they have more comparable pages, which leads to more significant outcomes. Integrators, which have to create content themselves, have differences between every page that dilute test results.

My favorite test: One of the best things you can do for your understanding of SEO is to score ranking factors by your own perception and then systematically challenge and test your assumptions. Create a spreadsheet with each ranking factor, give it a number between 0 and 1 based on your idea of its importance, and multiply all factors.
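The spreadsheet exercise translates into a few lines of code. This is a sketch of the multiplication only; the factor names and scores below are made-up placeholders, and `combined_score` is a hypothetical helper name.

```python
import math

# Hypothetical scores between 0 and 1; replace them with your own estimates.
scores = {
    "title optimization": 0.9,
    "page speed": 0.6,
    "backlink quality": 0.8,
}


def combined_score(scores: dict) -> float:
    """Multiply all factor scores after checking they are within 0..1."""
    for name, value in scores.items():
        if not 0 <= value <= 1:
            raise ValueError(f"score for {name!r} must be between 0 and 1")
    return math.prod(scores.values())


print(round(combined_score(scores), 3))
```

Because the scores are multiplied rather than added, a single low score pulls the whole product down, which makes it easy to see which assumptions are worth testing first.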

Monitoring systems

Testing only gives us an initial answer to the importance of ranking factors. Monitoring allows us to measure relationships over time and come to more robust conclusions. The idea is to track metrics that reflect ranking factors (CTR, for example, could reflect title optimization) and chart them over time to see whether optimization bears fruit. The idea is no different from regular (or what should be regular) monitoring, except for the new metrics.

You can build monitoring systems in

Looker

Amplitude

Mixpanel

Tableau

Domo

Geckoboard

GoodData

Power BI

The tool is not as important as the right metrics and URL path.
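Whatever the tool, the underlying aggregation is the same: group a metric by URL path and time period. Here is a minimal sketch for CTR per page type and week. The row layout (`week`, `url`, `clicks`, `impressions`) is an assumed export format, and treating the first path segment as the page type is a simplification.

```python
from collections import defaultdict
from urllib.parse import urlparse

# Hypothetical export rows, e.g. from a search performance report.
rows = [
    {"week": "2024-W22", "url": "https://example.com/blog/a", "clicks": 30, "impressions": 1000},
    {"week": "2024-W22", "url": "https://example.com/blog/b", "clicks": 10, "impressions": 1000},
    {"week": "2024-W22", "url": "https://example.com/products/x", "clicks": 5, "impressions": 100},
]


def page_type(url: str) -> str:
    """Use the first path segment as the page type (assumption)."""
    path = urlparse(url).path.strip("/")
    return path.split("/")[0] if path else "home"


def ctr_by_page_type(rows):
    """Return CTR keyed by (week, page type), summing clicks and impressions first."""
    totals = defaultdict(lambda: [0, 0])
    for row in rows:
        key = (row["week"], page_type(row["url"]))
        totals[key][0] += row["clicks"]
        totals[key][1] += row["impressions"]
    return {
        key: clicks / impressions
        for key, (clicks, impressions) in totals.items()
        if impressions  # skip groups without impressions
    }


for key, ctr in sorted(ctr_by_page_type(rows).items()):
    print(key, f"{ctr:.2%}")
```

Summing clicks and impressions before dividing avoids giving low-traffic URLs the same weight as high-traffic ones.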

Example metrics

Measure metrics by page type or a set of URLs over time to measure the impact of optimizations. Note: I’m using thresholds based on my personal experience that you should challenge.

User engagement:

Average number of clicks on navigation

Average scroll depth

CTR (SERP to site)

Backlink quality:

% of links with high topic-fit / title-fit between source and target

% of links from pages that are younger than 1 year

% of links from pages that rank for at least one keyword in the top 10

Page quality:

Avg. dwell time (compared between pages of the same type)

% users who spend at least 30 seconds on the site

% of pages that rank in the top 3 for their target keyword

Site quality:

% of pages that drive organic traffic

% of zero-click URLs over the last 90 days

Ratio between indexed and non-indexed pages
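The three site quality metrics can be computed from a simple page inventory. The sketch below assumes one record per URL with an `indexed` flag and organic clicks over the last 90 days (`clicks_90d`); both field names are hypothetical, and it uses the same 90-day window for "drives organic traffic" as for zero-click URLs, which is an assumption.

```python
def site_quality(pages):
    """Compute site quality metrics from a list of page records."""
    total = len(pages)
    if total == 0:
        raise ValueError("need at least one page")
    with_traffic = sum(1 for p in pages if p["clicks_90d"] > 0)
    indexed = sum(1 for p in pages if p["indexed"])
    non_indexed = total - indexed
    return {
        "pct_pages_with_traffic": 100 * with_traffic / total,
        "pct_zero_click_urls": 100 * (total - with_traffic) / total,
        # Ratio is undefined when every page is indexed.
        "indexed_to_non_indexed": indexed / non_indexed if non_indexed else None,
    }


# Hypothetical inventory of four URLs.
pages = [
    {"url": "/a", "indexed": True, "clicks_90d": 10},
    {"url": "/b", "indexed": True, "clicks_90d": 0},
    {"url": "/c", "indexed": True, "clicks_90d": 5},
    {"url": "/d", "indexed": False, "clicks_90d": 0},
]
print(site_quality(pages))
```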

It’s ironic that the leak happened shortly after Google started showing AI Overviews (AIOs) in search results, because we can use AI to find SEO gaps based on the leak. One example is title matching between source and target for backlinks. With common SEO tools, we can pull titles, anchor text and surrounding content of the link for referring and target pages.

We can then rate the topical proximity or token overlap with common AI tools, Google Sheets/Excel integrations or local LLMs and basic prompts like “rate the topical proximity of the title (column B) compared to the anchor (column C) on a scale of 1 to 10 with 10 being exactly the same and 1 having no relationship at all.”
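For the token overlap variant, no LLM is needed. A rough sketch, assuming a simple word-level Jaccard similarity as the overlap measure (my choice for illustration, not something the leak specifies), with hypothetical function names:

```python
import re


def tokens(text: str) -> set:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def token_overlap(title: str, anchor: str) -> float:
    """Jaccard similarity between title and anchor tokens (0 = none, 1 = identical)."""
    a, b = tokens(title), tokens(anchor)
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


# Hypothetical title/anchor pairs, as they might appear in columns B and C.
pairs = [
    ("Best running shoes 2024", "running shoes"),
    ("Best running shoes 2024", "cheap flights"),
]
for title, anchor in pairs:
    print(title, "|", anchor, "|", token_overlap(title, anchor))
```

This only catches literal word overlap. Synonyms and related topics score zero, which is where the prompt-based rating of topical proximity is the better fit.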

A leak of their own

Google’s ranking factor leak isn’t the first time the inner workings of a big platform algorithm became available to the public:

1/ In January 2023, a Yandex leak revealed many ranking factors that we also found in the latest Google leak. The underwhelming reaction surprised me just as much back then as today.

2/ In March 2023, Twitter published most parts of its algorithm. Similar to the Google leak, it lacks “context” between the factors, but it was insightful nonetheless.1

3/ Also in March 2023, Instagram’s chief Adam Mosseri published an in-depth follow-up post on how the platform ranks content in different parts of its product.2

Despite the leaks, there are no known cases of a user or brand hacking the platform in a clean, ethical way. The more a platform rewards engagement in its algorithm, the harder it is to game. And yet, the Google algorithm leak is quite interesting because it’s an intent-driven platform where users indicate their interest through searches instead of behavior. As a result, knowing the ingredients to the cake is a big step forward, even without knowing how much of each to use.

I cannot understand why Google has been so secretive about ranking factors all along. I’m not saying they should have published them to the degree of the leak. But they could have incentivized a better web with fast, easy-to-navigate, good-looking, informative sites. Instead, they left people guessing too much, which led to a lot of poor content, which led to algorithm updates that cost many businesses a lot of money.
